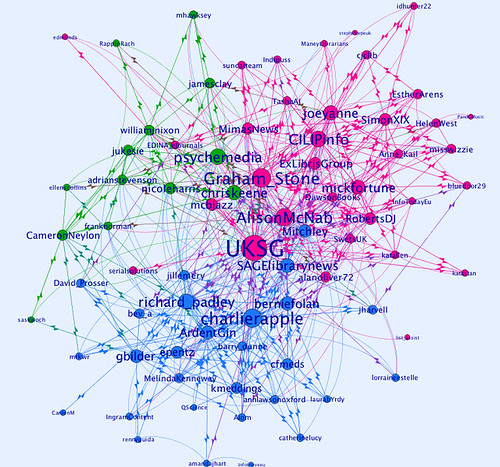

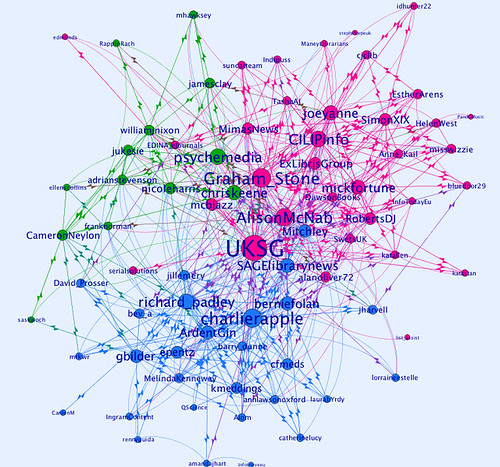

The UKSG Echochamber

Labels: Conference, flickr, hashtag, twitter, uksg


Vic Lyte and Sophia Jones from Mimas, The University of Manchester, presented on The UK Institutional Repository Search (IRS), which is a Mimas project commissioned by JISC in partnership with UKOLN and SHERPA. The project was completed in July 2009 and the service has been running continuously since then.

The volume of content stored in institutional and academic repositories is growing, and the presenters recognise that there are limited ways to access this information. This project has taken cross-search and aggregation to the next level, creating a visionary platform that pulls together disparate content, making it easier to search and discover in ways that meet personal or contextual needs.

They demonstrated how the search works, including an impressive 3D visualisation option.

They gave an overview of the JISC Historic Books and JISC Journal Archives products. They also talked about the JISC Collections e-platform enabling cross-aggregated search of a unique resource from the British Library. Content (300,000 books) previously inaccessible will be searchable on the platform. Features include three types of search (exact, detailed and serendipitous), tabbed filters, Google-style listings and search clouds. It all looked very impressive.

Labels: breakout session 18

Sarah Pearson from the University of Birmingham kicked off this breakout session and outlined her experience of collection development analysis at her institution. She went on to explain that while they have been doing this for some time, usage alone doesn't tell the whole story. They have been looking increasingly at how users get access to content and what path they take.

Sarah highlighted the numerous ways they promote usage at her university. These include news feeds about new acquisitions and trials; making content available in resource discovery interfaces; activating in link resolvers (SFX); integrating with Google Scholar/A&I services; making authentication as seamless as possible and embedding in apps on other sites.

There is a Mylibrary tab on the institution's portal page and a library news section, both of which are widely used. Users can search the library catalogue directly from the university's portal page rather than going to the library pages.

They are also about to use Super Search on Primo Central, which will be embedded in the virtual learning environment and Facebook.

To analyse usage they use a number of services including in-house templates that compare and contrast big deal usage with subscription analysis; JUSP (Jisc) and SCONUL Returns. They look at JR1 reports and evaluate cost per use. They pay particular attention to those resources with low or zero use. They also look at DB1 searches & sessions and compare archive with frontfile usage.
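The cost-per-use check described above can be sketched in a few lines. This is only an illustration, not Birmingham's actual template: the `Title` record, the figures and the threshold are all assumptions, with the request count standing in for a JR1 full-text total.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Title:
    name: str
    annual_cost: float  # subscription cost for the year (made-up figures)
    jr1_requests: int   # full-text requests for the year, as a JR1 report would give


def cost_per_use(title: Title) -> Optional[float]:
    """Annual cost divided by full-text requests; None when there was no use."""
    if title.jr1_requests == 0:
        return None
    return title.annual_cost / title.jr1_requests


def flag_for_review(titles: List[Title], threshold: float) -> List[str]:
    """Names of titles with zero use or a cost per use above the threshold."""
    flagged = []
    for title in titles:
        cpu = cost_per_use(title)
        if cpu is None or cpu > threshold:
            flagged.append(title.name)
    return flagged


# Hypothetical data, purely for illustration.
titles = [
    Title("Journal A", 1200.0, 400),
    Title("Journal B", 500.0, 0),
    Title("Journal C", 900.0, 30),
]
review_list = flag_for_review(titles, threshold=10.0)
```

Zero-use titles are handled separately because a cost per use cannot be computed for them, which matches the particular attention paid to low and zero use.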

With budgets under threat, librarians are looking at cancelling poorly performing content; big deals, for example, have to demonstrate overall good value.

The University of Birmingham approach, Sarah explained, is to activate online access content everywhere and let the user decide.

Google Analytics is now being used to look at user behaviour and to help understand more about users' journeys to access content. They know that the institution's portal page is the number one access point, but the OPAC and Google are still high referrer sites. Access via mobile devices is low, but they expect that to increase.

Evaluating usage is still very manual and it is labour intensive to measure the ROI of resources. It is important with increased pressure on budgets to ensure librarians are making the right decisions about which content to subscribe to and purchase. Evaluating usage is an important step in doing this.

Christian Box from IOP Publishing followed on with an interesting presentation about the work they are currently doing at the Institute of Physics. By sharing data between publishers and librarians, he said, we can make the industry more efficient.

I was particularly interested to hear more about the video abstracts they launched in February this year. Authors can now submit video abstracts and so far they have had over 10,000 video views. The human factor is important in engaging with students and researchers and helps to humanise the journal by conveying the inspiration and enthusiasm of the author or editor.

Publishers can learn a lot from evaluating the data they have such as seeing which research areas are growing. Web analytics; train of thought analysis; traffic dashboards including social media indexes and extended metrics such as A&I services are all important.

Platform development and ensuring connectedness is key. SEO is still vitally important here.

Social networking/media activity and how it impacts on usage is difficult to track. Physics World has 8,510 followers on Twitter.

Local language sites (Japan, Latin America and China) have moderate but growing traffic so far.

Access via mobile devices including iPhones and iPads is growing and publishers need to operate in this space to ensure users can access content wherever they are.

Challenges for publishers and librarians alike include creating new and meaningful metrics to cope with the rate of industry change; niche areas of research and primitive metrics.

As Christian stated at the beginning of his presentation, it is important for librarians and publishers to work together as much as possible and share data to increase efficiency wherever possible.

On day one of the UKSG 2011 Conference, John Naughton (The Open University and Cambridge University Library) paraphrased William Gibson, 'The future has already arrived...stop trying to predict it.'

'We are living through a revolution and we have no idea where it is going,' he suggested. He used the term 'information bewilderment' to explain further.

Capitalism, he argued, relies on the creative destruction of industries in waves of activity. This is exciting for those on the creative side but scary for those on the destructive side (i.e. the newspaper and music industries).

Obsolete business models are under threat and everyone at the conference is affected, he warned. In the digital age, 'disruptive innovation' is a feature and a way of cutting out the 'middle man' to create profit.

He cited Amazon Kindle Singles as an example, whereby they invite authors (previously published or unpublished) to publish shorter articles (longer than a magazine or journal article but shorter than a novel) as an e-book on the Amazon Kindle platform.

Prediction is futile but you can measure changes. Complexity is the new reality and the rise and rise of user-generated content offers numerous opportunities for end users to 'cut out the middle man' (i.e. publishers).

In the old ecosystem there were big corporations while the new ecosystem relies on everything being available in smaller chunks of content (tracks not albums, articles not journals etc).

What's it got to do with libraries?

There is an intrinsic belief that libraries and librarians do good work but a wave of 'creative disruption' doesn't care. Libraries have traditionally taken a physical form and one of the debates has been about how to maintain the idea of a 'library' when users are increasingly accessing content online. When all academic activity takes place in a digital environment (soon?) how will libraries justify their existence (from place to space)?

John Naughton ended his presentation by suggesting librarians could add value by building services around workflows (social media; RSS feeds etc) as the everyday avalanche of data cries out for the skills of the librarian to create order.

'The best way to predict the future is to invent it.'

Sounds like good advice for those of us in publishing too.

Labels: Plenary session 1

Labels: "open data", #uksg, linked data, uri

Labels: open access, uksg

Labels: #uksg, mobile services, technology

Labels: Author, Identification, orcid, uksg

Labels: consequences, economy, emergence, he, innovation, knowledge, postgraduate, uksg, unintended

Rufus Pollock from Open Knowledge Foundation tells us how metadata can and will be more open in the future, and why we should care.

Libraries and publishing used to be mainly about reproduction of the printed word. Access and storage too, but mainly reproduction, which once upon a time was very costly; people needed to club together and form societies in order to afford it.

Now we're matching, filtering and finding, but there's too much info and every password you have to enter slows you down, and slows down innovation and innovators. Matching is king in a world of too much info - Google's aim is to match people with information and it all relies on humans making the links and building sites. Imagine if they'd had to ask permission of every single person - we would have missed out on something big.

Of course people have to be paid, machines have to run etc. BUT much of this production is already paid for i.e. via academia itself: instead of using the same few favourite books, why not ask friends? Or create our own journals?

Data and content are not commodities to sell but platforms to build on... there are plenty of ways to make money without going closed (although it might be different people making the money of course!)

And why does metadata matter so much? It's the easy way in; everything attaches to it: purchasing services; Wikipedia; analytics such as who wrote it, how many people bought it etc.

Data is like code, and so the level of re-use and the number of applications we can create are huge.

One such project is JISC OpenBib which has three million open records provided by the British Library. It integrates with Wikipedia, and includes a distributed social bibliography platform so that users can contribute, correct and enhance. We need to harness the users to help us make much better catalogues, to enrich catalogue data.

So metadata is the skeleton and right now we have the chance to make a significant change for the better. Metadata and content WILL all be free one day... it may take some time but it will happen. The day is coming when there won't be a choice. There will be enough people with open data to make it happen.

Labels: analysis, database, ebooks, model, package, pda, purchasing, uksg

This section of the conference allowed librarians and publishers to hear directly from scientific researchers; first up is Philip Bourne from the University of California, San Diego, who is a computational biologist among other things (e.g. open access advocate).

Bourne starts by explaining his big hope for scientists’ relationship with publishers in the future:

“as a scientist I want an interaction with a publisher that does not begin when the scientific process ends but begins at the beginning of the scientific process itself”

The current situation is:

But why couldn't the publisher come in at the data stage? They could help store it for our group. Or even earlier, at the ideation stage: The moment I jot down a few ideas, the publisher could control access to that information and then at some point down the line when the access is opened up – that’s when it becomes ‘published’.

There are movements in that direction. For example in Elsevier’s ScienceDirect (and some others too) you can click on a figure/image and move it around and manipulate it – the application is integrated on the platform because a publisher and a data provider have cooperated. But this is just the beginning; when you click on the diagram in the article, you’re getting some data back but it’s generic and it might not be organised in the way that you want. The figure is being viewed separately from the article text and related data – now you have to figure out what that metadata means to the article. So this is a good step but it’s not capturing all of the knowledge that you might want. It needs more cooperation, more open and interactive apps. And it needs:

So Bourne wants publishers to become more involved with his work as – he confesses – some of the work is less than organised. He thinks scientists need help with management of data in general, and specifically:

Solutions?

Bourne’s ‘Beyond The PDF’ workshop has generated discussion and ideas. He says “the notion of a journal is just dead – sorry. The concept of a journal is lost to me; its components and objects and data are what I think about. Research articles are useful but the components could be seen as a nanopublication.”

We need more:

Microsoft are looking at some of these things already and Bourne’s group has written plugins for Word – e.g. as you type, various ontologies are checked automatically and may suggest you change a common name to a standard name. You can tag that at the point of authoring.
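The idea of checking names against an ontology while writing can be illustrated with a toy sketch. It is not the Word plugin: the `STANDARD_NAMES` table is a hypothetical stand-in for a real ontology lookup, and `suggest_standard_names` is an invented helper name.

```python
import re
from typing import List, Tuple

# Hypothetical mapping from common names to standard names; a real tool
# would query an ontology service rather than a hard-coded table.
STANDARD_NAMES = {
    "vitamin c": "ascorbic acid",
    "fruit fly": "Drosophila melanogaster",
}


def suggest_standard_names(text: str) -> List[Tuple[int, str, str]]:
    """Return (position, term as written, suggested standard name) tuples."""
    suggestions = []
    for common, standard in STANDARD_NAMES.items():
        pattern = r"\b" + re.escape(common) + r"\b"
        for match in re.finditer(pattern, text, flags=re.IGNORECASE):
            suggestions.append((match.start(), match.group(0), standard))
    # Sort by position so suggestions follow the order of the text.
    return sorted(suggestions)


draft = "We fed the fruit fly extra Vitamin C."
suggestions = suggest_standard_names(draft)
```

Each suggestion keeps the position of the term, so an editor could attach the tag at the point of authoring rather than replacing the author's wording.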

All of this is not yet a huge success but it’s coming. Right now there is not much incentive, but if publishers can help fast-track the development of these applications then authors will start using them. Talking about it is of little use; it will only appear on researchers’ radar when they see science done in a way where this process has made a difference. For example, Bourne’s group is running a test to look at spinal muscular atrophy (designated by the NIH as treatable). They will coalesce a set of disparate tools and engage the publishers (Elsevier have opened up this), in order to address a specific problem that could change lives.

If this works then it would get the kind of attention that makes scientists take notice. Only when they see this process succeeding will they start adopting it. The tipping point will come when the tenure ‘reward system’ starts to change for the next generation; then the way science is researched will improve.

Labels: data, future, internet, libraries, science, uksg, value